Using R and RStudio for data science on Amazon SageMaker

Most data scientists learn R during their academic careers and heavily use the open-source RStudio desktop IDE as they learn. When moving to a corporate environment, they struggle to find an appropriate environment to continue using R, and are constrained to continue using the Open Source Desktop version.

With the RStudio Pro IDE now available through Amazon SageMaker, data scientists can utilize R with Amazon’s cloud capabilities. This integration provides a great starting point for data science users to scale their workflows in the cloud.

RStudio Pro now available on SageMaker

Users can now access the RStudio Pro IDE in SageMaker, which along with all the awesome features of the desktop version, also provides enterprise features to help R users do better work. Users can use multiple sessions simultaneously, use different versions of R, connect to different data sources and much more.

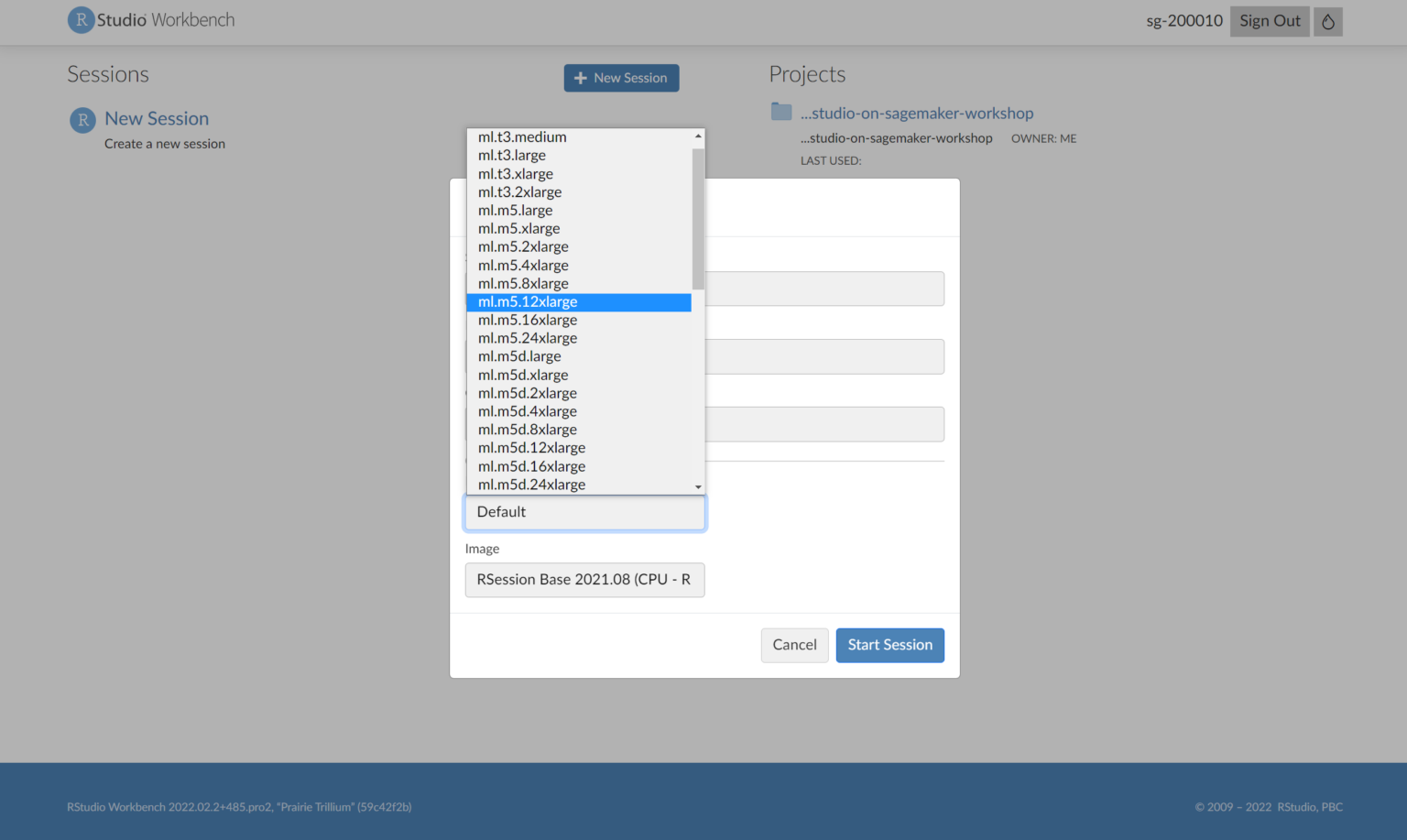

Choose a flexible development environment

The SageMaker environment allows RStudio users to choose different EC2 instances for each session, which provides the option to run different workflows in different environments. For example, if you need a quick analysis of a small dataset, you can choose a smaller-sized EC2 instance like t2.medium. And if simultaneously you want to train a machine learning model using millions of rows of data, you can do that with a larger-sized instance like t2.xxlarge.

Interacting with AWS resources in R made simple

A major advantage of this integration is simplified access to AWS resources like S3 within your R code. The paws R package requires AWS access credentials to be stored in the environment. With RStudio running on an AWS environment in SageMaker, AWS credentials are already available in the session and can be used to access data in S3.

## Working with SageMaker Python SDK

# Connecting to SageMaker

library(reticulate)

sagemaker <- import('sagemaker')

session <- sagemaker$Session()

bucket <- session$default_bucket()

role_arn <- sagemaker$get_execution_role()

# Uploading data

s3_train <- session$upload_data(path = 'dataset/churn_train.csv',

bucket = bucket, key_prefix = 'r_example/data')

s3_valid <- session$upload_data(path = 'dataset/churn_valid.csv',

bucket = bucket, key_prefix = 'r_example/data')

s3_test <- session$upload_data(path = 'dataset/churn_test.csv',

bucket = bucket, key_prefix = 'r_example/data')

# Downloading data

# Create paws s3 object

s3 <- paws::s3()

s3_download <- s3$get_object(

Bucket = bucket,

Key = 'r_example/data/churn_train.csv'

)

require(magrittr)

s3_download$Body %>% rawToChar %>% read.csv(text = .)

Build ML pipelines through RStudio

The SageMaker service itself provides access to various pre-built models, and users of RStudio can now utilize this by accessing the SageMaker API in their R code. Through this integration, R users can now directly interact with SageMaker and build better sophisticated ML models.

Another way to interact with the SageMaker service is available through the vetiver R package. Any vetiver model API can now be directly deployed on SageMaker and exposed to other services consuming the modeling results.

Learn how to get started with RStudio on SageMaker

Want to learn more about how to get started with RStudio on Amazon SageMaker? On June 6th at 11am ET, Posit team members will show you how to analyze your organization’s data in S3 and train ML models with RStudio on SageMaker. Add the YouTube Premier to your calendar here.