2025-11-21 AI Newsletter

External news

Model releases

There have been several notable model releases in the past few weeks.

Most recently, Google released Gemini 3.0 on Tuesday, following much anticipation after a leaked model card and reports of significant improvements. It’s too early to say anything definitive, but it appears to be a strong model.

OpenAI released GPT-5.1 last week, calling it a “smarter, more conversational ChatGPT.” The release seems focused on non-developers using ChatGPT.

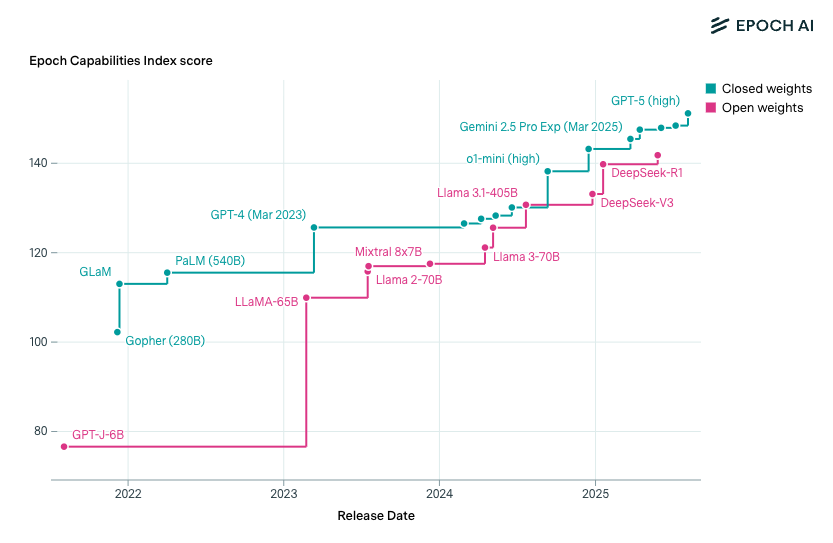

An open-weights model from Moonshot AI, Kimi K2 Thinking, was also released last week. While still rough around the edges, the model uses tools very well and often writes more eloquently than Claude Sonnet 4.5 or GPT 5.1. A few weeks ago, the AI research organization Epoch AI released this graph, arguing that the capabilities of the leading open-weight models are only about three months behind those of closed-weight ones:

This three-month window initially seemed narrow. Our first reaction was that the Epoch Capabilities Index might be too easily swayed by open-weights releases that may game LLM benchmarks. But Kimi K2 Thinking’s performance makes the “three months behind” heuristic seem more plausible.

TOON

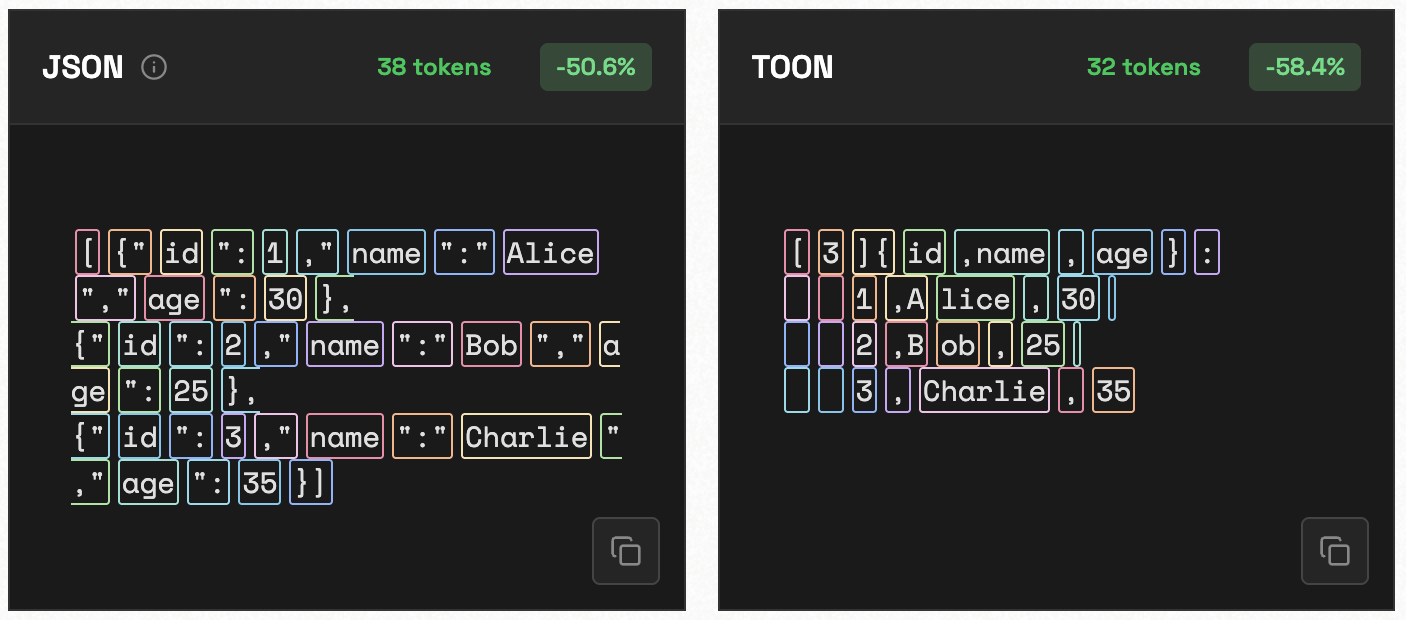

Token-oriented object notation (TOON) is an alternative to JSON that can reduce the token cost of context sent to LLMs. You can see examples and comparisons to JSON (the typical format used to send information to LLMs) here.

TOON has been getting a lot of attention recently. While the idea is appealing, we’d caution against sending large amounts of raw data to LLMs as a general-purpose solution. It’s often better to represent data in ways specific to your problem. For example, the newest ellmer release includes df_schema(), which creates token-efficient representations of data frames. Another approach is to provide LLMs with deterministic tools that help them analyze large amounts of data without placing the full dataset in the context.
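As a rough illustration of the "problem-specific representation" idea, here is a minimal Python sketch that summarizes a list of records as a short schema instead of sending every row. This is not ellmer's df_schema() and does not reproduce its output format; the function name `summarize_records`, the summary layout, and the sample data are all made up for this example.

```python
import json


def summarize_records(records, max_examples=3):
    """Build a compact text summary: row count, then one line per column
    with the observed Python types and a few distinct example values.
    (Hypothetical helper for illustration only.)"""
    columns = {}
    for row in records:
        for key, value in row.items():
            columns.setdefault(key, []).append(value)

    lines = [f"rows: {len(records)}"]
    for name, values in columns.items():
        type_names = sorted({type(v).__name__ for v in values})
        examples = []
        for v in values:
            if v not in examples:
                examples.append(v)
            if len(examples) == max_examples:
                break
        example_text = ", ".join(str(e) for e in examples)
        lines.append(f"{name} ({'|'.join(type_names)}): e.g. {example_text}")
    return "\n".join(lines)


# Made-up sample data: 1,000 rows with three columns.
records = [
    {"id": i, "species": ["setosa", "virginica"][i % 2], "petal_length": round(1.0 + (i % 50) / 10, 1)}
    for i in range(1000)
]

summary = summarize_records(records)
full_json = json.dumps(records)

print(summary)
print(len(summary), "characters in the summary vs.", len(full_json), "in the full JSON")
```

The summary stays a few lines long no matter how many rows the data has, whereas the full JSON grows with every row. Whether a summary like this is enough depends on the question being asked of the model.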

Posit news

- A new release of ellmer (0.4.0) is now on CRAN! ellmer makes it easy to use LLMs from R. New features include caching and file uploads for Claude models, support for OpenAI’s more “modern” responses API, web search tools, and better security. chatlas, ellmer’s Python sibling package, is also quickly catching up in functionality.

- The btw package is now on CRAN. btw is a toolkit that makes it easier to build what you want with ellmer. It provides tools to read R package documentation, inspect data frames, perform git operations, and more. These tools are available through multiple interfaces, including an MCP server, an interactive app, and simple function calls for quick copy-pasting.

- In a release post for testthat 3.3.0, Hadley Wickham discussed his experience using Claude Code for R package development.

- We (Simon and Sara) have been investigating an issue where LLMs struggle to accurately interpret plots. We wrote up some initial conclusions in this blog post: When plotting, LLMs see what they expect to see. The post uses the evals from the bluffbench package.

- Many of Posit’s LLM tools can execute code and access your files. What does this mean for privacy? We also wrote a blog post discussing how privacy works with Posit’s LLM tools, arguing that the most important requirement is trust in your LLM provider.

Terms

Tokens are the fundamental units of text that LLMs read and generate. Before a model processes content, a tokenizer breaks that content into pieces, and the tokenizer’s vocabulary determines which sequences of characters count as a single token. You can get a sense of how tokenization works by playing around with a tokenizer app, like this one or this one that lets you compare different formats.

Tokenization happens on the model provider’s side right before inference (i.e., when the model takes in the request and generates a response). This means that all the text you send gets tokenized and counts toward your context window.
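To make the idea concrete, here is a toy tokenizer in Python. It is only a sketch: real tokenizers use large learned subword vocabularies (for example, built with byte-pair encoding), while this one uses a tiny hand-written vocabulary (`VOCAB`, invented for this example) and greedy longest-match, falling back to single characters.

```python
def tokenize(text, vocab):
    """Greedy longest-match tokenizer: at each position, take the longest
    vocabulary entry that matches; if none matches, emit one character."""
    tokens = []
    max_len = max(len(entry) for entry in vocab)
    i = 0
    while i < len(text):
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if size == 1 or piece in vocab:
                tokens.append(piece)
                i += size
                break
    return tokens


# Hypothetical vocabulary, invented for illustration.
VOCAB = {"token", "ization", " is", " fun"}

known = tokenize("tokenization is fun", VOCAB)
unknown = tokenize("xyz", VOCAB)

print(known)    # ['token', 'ization', ' is', ' fun']
print(unknown)  # ['x', 'y', 'z']
```

Text that matches the vocabulary well becomes a few long tokens, while unfamiliar text splits into many short ones. The same principle is why the same content can cost a different number of tokens depending on how it is written.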

If you’ve worked with LLM APIs before, you’ve likely seen how LLMs use JSON to pass structured data. JSON is just text, too: the entire payload, including braces, quotes, and repeated keys, gets tokenized like any other string. Because of this, formats that reduce the amount of text can meaningfully change the token cost of what you send to a model. This is part of what has driven the recent interest in alternatives like TOON, discussed above. Below is a comparison of JSON and TOON formats for the same text. Note the different token counts.
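For a rough, text-only sense of the size difference, the Python sketch below encodes the same records as JSON and as a TOON-style table (a header naming the fields once, then one comma-separated line per row). The `to_tabular` helper is hypothetical and only loosely modeled on TOON's tabular arrays: it handles flat, uniform records and ignores quoting and escaping, so consult the TOON documentation for the real rules. It compares character counts, which are only a proxy for token counts.

```python
import json


def to_tabular(name, rows):
    """Encode a uniform list of flat dicts as a header line plus one
    comma-separated line per row. (Hypothetical helper; no quoting or
    escaping, so values must not contain commas or newlines.)"""
    fields = list(rows[0].keys())
    if any(list(row.keys()) != fields for row in rows):
        raise ValueError("all rows must share the same keys in the same order")
    header = name + "[" + str(len(rows)) + "]{" + ",".join(fields) + "}:"
    body = ["  " + ",".join(str(row[f]) for f in fields) for row in rows]
    return "\n".join([header] + body)


# Made-up sample data.
users = [
    {"id": 1, "name": "Alice", "role": "admin"},
    {"id": 2, "name": "Bob", "role": "user"},
]

as_json = json.dumps({"users": users})
as_tabular = to_tabular("users", users)

print(as_json)
print(as_tabular)
print(len(as_json), "characters as JSON vs.", len(as_tabular), "as a table")
```

The saving comes from stating the field names once instead of repeating them in every record, so it grows with the number of rows. Deeply nested or irregular data would not fit this layout.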

Learn more

- This writeup from Google DeepMind describes “Consistency Training,” where models are trained to provide the same response to two prompts: one neutrally phrased and another containing sycophantic cues or jailbreaking attempts.

- A new paper puts numbers to “belief shift,” the phenomenon where LLMs’ stated beliefs change during long conversations.