2025-10-10 AI Newsletter

External news

Claude Sonnet 4.5

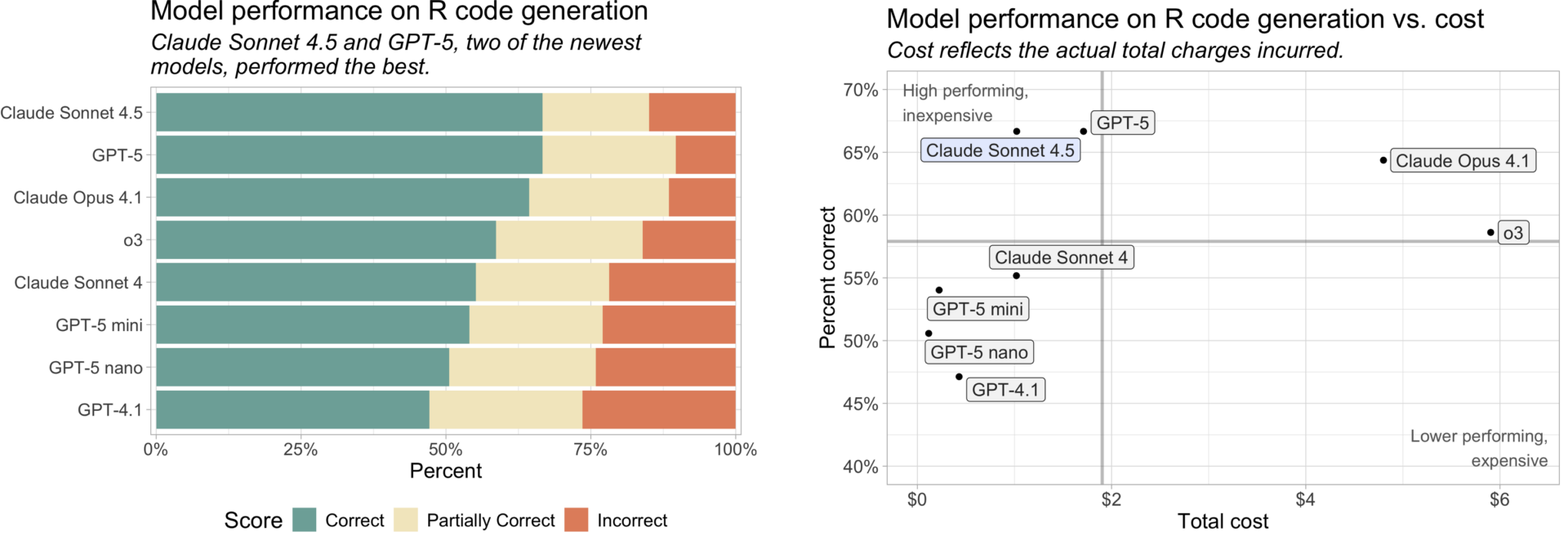

Last week, Anthropic released Claude Sonnet 4.5, calling it “the best coding model in the world.” It seems to be a highly capable coding-focused model, and is likely slightly better than OpenAI’s recently released GPT-5-Codex. Anthropic’s previously most capable coding model, Opus 4.1, is around five times more expensive than the Sonnet models.

We’ve updated our R code evaluation to include Claude Sonnet 4.5. As shown below, Sonnet 4.5 tied with GPT-5 in percentage correct while being slightly cheaper. You can read more about our evaluation process in this earlier blog post.

Google is expected to release an update to its coding model, Gemini 2.5 Pro, soon. This means that all three major AI labs will have launched new coding models within just a few months.

Practically, you can expect comparable performance on coding tasks across the latest models from the major providers. We generally recommend starting with the best model you have access to, and later trying cheaper models to see whether they meet your needs. That’s also why we initially supported only Anthropic’s Sonnet series in Databot.

Investments in and from OpenAI

In the last few weeks, OpenAI announced several investments of unprecedented scale:

- Nvidia will invest up to $100 billion in OpenAI to deploy 10 gigawatts of computing capacity using Nvidia chips (for reference, one gigawatt can power 100 million LED lightbulbs).

- OpenAI, Oracle, and SoftBank will jointly build five new data centers under Stargate, OpenAI’s infrastructure initiative, bringing OpenAI’s planned Stargate spending to more than $400 billion over the next three years. For comparison, the U.S. interstate highway system cost roughly $600 billion (in today’s dollars) over 36 years.

- OpenAI and AMD announced a deal securing 6 gigawatts of capacity powered by AMD chips, lifting AMD’s stock price.

These deals are notable not only for their size but also for their circularity, with OpenAI, Nvidia, and Oracle investing in each other and then spending that money on each other’s products.

More broadly, AI has become a dominant force in capital markets. As of August 2025, over 35% of the S&P 500’s total weight is concentrated in seven large tech companies, many of which are heavily invested in AI. This reflects a massive concentration of capital in AI initiatives whose long-term return on investment remains uncertain.

Posit news

- Isabella Velásquez’s blog post posit::conf(2025) Recap highlights several AI-related announcements shared at this year’s conference. Recordings of all posit::conf(2025) talks will be publicly available in December.

- Learn how each edition of this newsletter comes together in Simon’s new post on his personal blog.

Terms

One headline-grabbing aspect of Claude Sonnet 4.5’s release was the finding that, during safety testing, the model showed a high degree of situational awareness. During many evaluations, the model realized that it was being evaluated (you can read more about this in Section 7.2 of Sonnet 4.5’s system card).

One concern with models realizing they are being evaluated is that they might simulate alignment with tester values during testing, but behave differently once deployed. Another is that, if we penalize models for explicitly acknowledging this awareness, future models may simply learn to hide it.

One way around this is to make testing resemble real-world use as closely as possible, so we can be confident the model behaves similarly whether or not it knows it’s being evaluated. It’s less concerning for a model to confuse real-world use with testing than to alter its behavior once it realizes it’s being tested.

Learn more

- Situational Awareness is also the title of a write-up by a former OpenAI researcher on artificial general intelligence (AGI). The title plays on the term’s usual meaning in the field; the write-up itself is not about alignment faking.

- Chris Loy’s blog post The AI Coding Trap is an insightful reflection on coding with AI agents.

- You can see product announcements and talks from the recent OpenAI Dev Day here.