AI Newsletter 2026-01-30

External news

In November, a Claude user extracted a document from Opus 4.5 that the model called its “soul document.” The document was not a system prompt, but instead appeared to be used during model training, and described the essence of Claude’s “character.”

Last week, Anthropic released a version of this document, calling it “Claude’s constitution.” The document, which Claude sees many times throughout training, exhaustively details the characteristics, values, and behaviors that Anthropic wants Claude to have. There’s a nice interview with the primary author of the document, Amanda Askell, in a segment of the Hard Fork podcast.

The constitution states that Claude’s primary values should include being safe, ethical, helpful, and compliant with Anthropic’s specified guidelines. Some interesting parts from the constitution and accompanying writeup (all emphasis ours):

- Although the constitution provides a lot of detail about what it means to be helpful and ethical, it also encourages Claude to rely on its own moral reasoning: “We don’t want to assume any particular account of ethics, but rather to treat ethics as an open intellectual domain that we are mutually discovering.”

- In the writeup, the authors mention that they think models need to understand the why behind behavioral guidance: “We think that in order to be good actors in the world, AI models like Claude need to understand why we want them to behave in certain ways, and we need to explain this to them rather than merely specify what we want them to do.”

- The constitution tells Claude that it may warrant moral concern: “Claude’s moral status is deeply uncertain… We are not sure whether Claude is a moral patient, and if it is, what kind of weight its interests warrant.”

- On introspection: “Claude exists and interacts with the world differently from humans:…[it] may be more uncertain than humans are about many aspects of both itself and its experience, such as whether its introspective reports accurately reflect what’s actually happening inside of it.”

Posit news

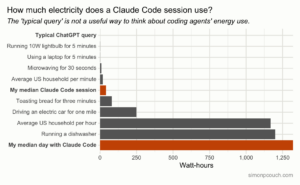

Electricity use of AI coding agents

We’ve written before in this newsletter about AI and the environment. In short, if you’re just chatting with LLMs on the web, the electricity and water usage is likely a rounding error on your total consumption. That said, over the last year, compute-intensive LLM tools have become increasingly popular, including coding agents like Claude Code that can kick off hundreds of larger-than-average queries per session.

Simon compared the electricity use of coding agents to that of a typical query. In a recent blog post, he estimated that his average day with Claude Code (~50 five-prompt sessions) consumes as much electricity as 4,400 “typical queries,” comparable to running an additional dishwasher load per day or keeping an extra refrigerator running.
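To put that estimate on a per-session and per-prompt basis, here is a rough back-of-the-envelope sketch in Python. It uses only the figures quoted above and treats the approximate "~50" as exactly 50; the variable names are ours, and the result is an average, not a measurement of any individual prompt.

```python
# Figures quoted from the estimate above ("~50" treated as exactly 50).
sessions_per_day = 50
prompts_per_session = 5
typical_queries_per_day = 4400

# Average "typical query" equivalents per session and per prompt.
queries_per_session = typical_queries_per_day / sessions_per_day
queries_per_prompt = queries_per_session / prompts_per_session

print(f"Per session: {queries_per_session:.0f} typical queries")
print(f"Per prompt:  {queries_per_prompt:.1f} typical queries")
```

By this arithmetic, one session works out to about 88 typical queries and one prompt to roughly 18, which is consistent with a single prompt to a coding agent kicking off many model calls behind the scenes.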

How well do LLMs interpret plots? (Part 2)

Previously, we discussed how LLMs often fail to interpret plots that contradict their expectations. In a follow-up post, we look at how well LLMs interpret plots under normal, non-adversarial conditions, and we try a host of interventions to address the interpretation issues.

ragnar 0.3.0 available on CRAN

ragnar is a toolkit for building retrieval-augmented generation (RAG) workflows in R. The new version includes faster ingestion of corpora, support for new embedding providers, and more. Read the release blog post here.

Terms

LLM training occurs in two main stages. In pre-training, the model learns language by predicting the next word across massive amounts of text, providing the model with its general knowledge and capabilities. Then, in post-training, developers more directly steer the model’s behavior by providing the model with tasks and rewarding it if it completes the task successfully. This process is called reinforcement learning.

It seems that Claude’s constitution mostly comes into play in the post-training stage: the model encounters situations where the constitution might be relevant, and responses that follow the constitution are counted as successes.

Learn more

- Anthropic CEO Dario Amodei’s 2024 essay “Machines of Loving Grace,” which focused on the upsides of AI, was widely discussed. You might have heard the line “country of geniuses in a datacenter,” which is from that essay. Amodei just released a follow-up, “The Adolescence of Technology,” that’s more focused on AI’s risks.

- Wes McKinney wrote about how coding agents affect language preferences.