Evaluating the MLOps readiness of your team

Picture yourself finishing a project where you carefully tuned and trained a machine learning model on data that matters to your organization. Is that the end of the model’s life cycle? Often not, because the model still needs to be put into production.

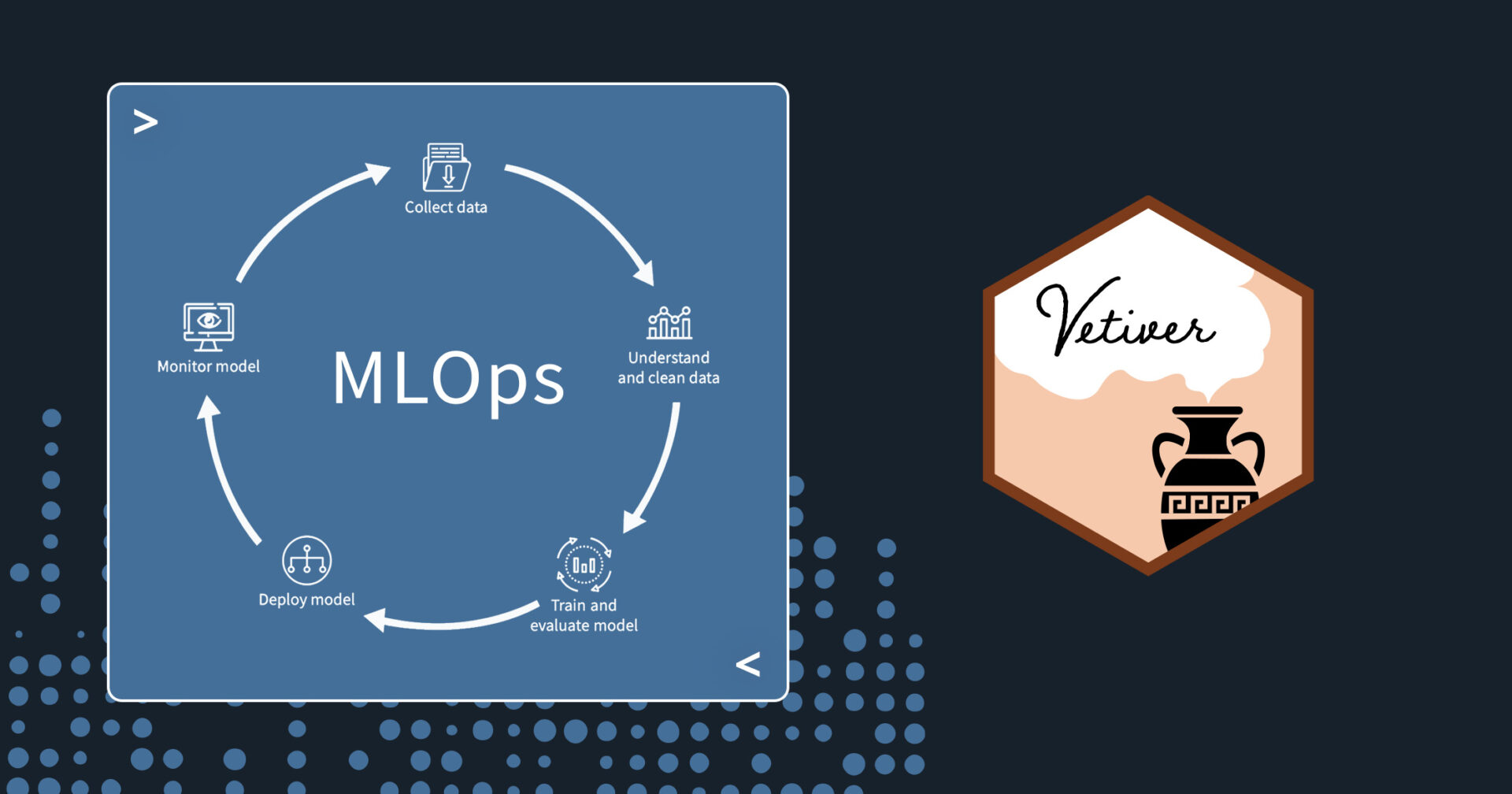

Machine learning operations, or MLOps, is a set of practices to deploy and maintain machine learning models in production reliably and efficiently. If you develop models, you can use these practices for tasks like versioning, deploying, and monitoring models. However, there is no one-size-fits-all MLOps strategy, so it can be difficult to know if your team would benefit from these practices.

Who needs MLOps?

In terms of MLOps maturity, it is still early days for many organizations and practitioners. Your team might be ready for machine learning operations if you have questions such as:

- Where are our models stored?

- How do I share my model with the rest of my organization?

- How can I use a model without importing it and reproducing its environment?

- What is our process or cadence to retrain deployed models with new data?

- How do I integrate my model into a workflow orchestration system?

- How can I monitor model performance over time?

When deciding whether your team should start adopting MLOps practices, two points to consider are the quantity and velocity of your models. Teams with a small number of models, or with models that are rarely updated, may not see much benefit. In those situations, one-off processes to share or deploy models will not impede your organization’s productivity much. However, teams with many data scientists working together, teams with many models to manage, and teams whose models are updated frequently stand to gain from MLOps.

How does MLOps help?

First, MLOps practices improve model management. Versioning helps when you have many models to track. It lets you store the model binary along with structured metadata that gives context on features, model size, descriptions, or other custom fields. Versioning also makes it easy to iterate on new models or roll back to previous versions.
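To make the idea of a versioned model plus metadata concrete, here is a minimal sketch using only the Python standard library. `ModelStore` and `ModelVersion` are hypothetical names invented for this illustration and are not part of any particular MLOps tool; a plain dictionary stands in for a fitted model, and the store lives in memory where a real team would use shared storage.

```python
import hashlib
import pickle
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ModelVersion:
    """One stored version: the serialized model plus structured metadata."""
    version: int
    binary: bytes
    metadata: dict
    created: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class ModelStore:
    """Hypothetical in-memory store, for illustration only."""

    def __init__(self):
        self._versions = {}  # model name -> list of ModelVersion, oldest first

    def write(self, name, model, **metadata):
        """Serialize the model, attach metadata, and return the new version number."""
        binary = pickle.dumps(model)
        metadata = {
            **metadata,
            "size_bytes": len(binary),
            "sha256": hashlib.sha256(binary).hexdigest(),
        }
        history = self._versions.setdefault(name, [])
        entry = ModelVersion(len(history) + 1, binary, metadata)
        history.append(entry)
        return entry.version

    def read(self, name, version=None):
        """Return (model, metadata) for the latest version, or for a specific one."""
        history = self._versions[name]
        if version is None:
            entry = history[-1]
        elif 1 <= version <= len(history):
            entry = history[version - 1]
        else:
            raise KeyError(f"{name} has no version {version}")
        return pickle.loads(entry.binary), entry.metadata


# A dict stands in for a fitted model; the metadata fields are made up.
store = ModelStore()
store.write("churn", {"coef": [0.4, 1.2]},
            features=["tenure", "spend"], description="baseline")
store.write("churn", {"coef": [0.5, 1.1]},
            features=["tenure", "spend"], description="retrained")

latest, latest_meta = store.read("churn")      # newest version by default
previous, _ = store.read("churn", version=1)   # roll back to the first version
```

Keeping every version instead of overwriting is what makes rollback a lookup rather than a retraining job.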

MLOps practices also help with model collaboration. One data practitioner can deploy a model as a REST API, and others can use the API endpoint to make predictions, just as they would with a model in memory. This lets practitioners spend more time thinking about the outputs of the model rather than loading a model and reproducing the environment it was trained in.
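As a rough sketch of that division of labor, the example below serves a stand-in model over HTTP and then calls it from the "colleague's" side, using only the Python standard library. The `/predict` path, the JSON payload shape, and the `predict` and `remote_predict` functions are assumptions made for this illustration; a production deployment would normally rely on a dedicated serving tool instead of `http.server`.

```python
import json
import threading
from http.client import HTTPConnection
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(rows):
    """Stand-in for a trained model: a fixed linear score per input row."""
    return [0.4 * row["tenure"] + 1.2 * row["spend"] for row in rows]


class PredictHandler(BaseHTTPRequestHandler):
    """Accepts a JSON list of rows on POST /predict and returns predictions."""

    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        rows = json.loads(self.rfile.read(length))
        body = json.dumps({"predict": predict(rows)}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the example quiet


def remote_predict(host, port, rows):
    """What a colleague runs: no model object and no training environment."""
    conn = HTTPConnection(host, port, timeout=5)
    try:
        conn.request("POST", "/predict", body=json.dumps(rows),
                     headers={"Content-Type": "application/json"})
        return json.loads(conn.getresponse().read())["predict"]
    finally:
        conn.close()


# Serve on an OS-assigned local port in a background thread, call it, shut down.
server = HTTPServer(("127.0.0.1", 0), PredictHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()

rows = [{"tenure": 10, "spend": 2.5}]
remote = remote_predict("127.0.0.1", server.server_port, rows)

server.shutdown()
server.server_close()
```

The caller only needs the endpoint and the input format; everything about how the model was trained stays on the server side.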

Finally, MLOps practices help keep models relevant. A model can return predictions without error even when it is performing poorly. Over time, the statistical distribution of the input data may shift, or the relationship between the input features and the outcome may change. Without monitoring for this performance drift, it is difficult to tell whether your model is still relevant to your current data.
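One simple way to watch for this, sketched below, is to compute an error metric per time period once the true outcomes arrive and flag periods where it rises well above a baseline. The function names, the choice of mean absolute error, the 1.5x tolerance, and the data are all illustrative assumptions, not a recommended threshold or a specific tool's API.

```python
from collections import defaultdict
from statistics import mean


def mae_by_period(records):
    """Mean absolute error per period from (period, actual, predicted) tuples."""
    errors = defaultdict(list)
    for period, actual, predicted in records:
        errors[period].append(abs(actual - predicted))
    return {period: mean(errs) for period, errs in sorted(errors.items())}


def flag_drift(metrics, baseline, tolerance=1.5):
    """Return the periods whose error exceeds `tolerance` times the baseline."""
    return [period for period, value in metrics.items()
            if value > tolerance * baseline]


# Made-up monitoring data: predictions were logged at scoring time and the
# actual outcomes were joined in later.
records = [
    ("2024-01", 10.0, 9.0), ("2024-01", 12.0, 13.0),
    ("2024-02", 11.0, 10.0), ("2024-02", 9.0, 10.0),
    ("2024-03", 15.0, 11.0), ("2024-03", 16.0, 12.0),
]

metrics = mae_by_period(records)
drifted = flag_drift(metrics, baseline=metrics["2024-01"])
```

In this toy data every prediction is returned without error, yet the third period's error is four times the baseline, which is exactly the kind of silent degradation monitoring is meant to surface.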

Where can I start with MLOps?

The vetiver framework is well suited to teams getting started with MLOps tasks. It does not aim to be an all-in-one solution for every part of the MLOps life cycle. Instead, vetiver provides tooling to version, deploy, and monitor ML models. This narrower focus keeps the user experience simple and lets vetiver compose with other tools as an organization’s MLOps needs mature.

If you’re looking to get started with MLOps, take a look at the vetiver documentation, or check out the MLOps with vetiver in Python and R webinar for a more in-depth example.