Posit x Databricks: December ’23 Webinar Recap

In 2023, Posit and Databricks announced a number of upcoming integrations designed to simplify the lives of joint users. In early December, we presented a joint webinar to showcase the progress we’ve made toward these integrations over the past several months. The following is a brief summary of what was covered in that webinar, along with the questions attendees submitted and our answers to them.

Integration Overview

As announced in the initial partnership post, there are three current areas of focus for Posit and Databricks:

- An improved Posit Workbench experience for Databricks users

- Improved connection options via sparklyr and odbc

- Providing access to Posit products in the Databricks Marketplace

Posit Workbench and Databricks

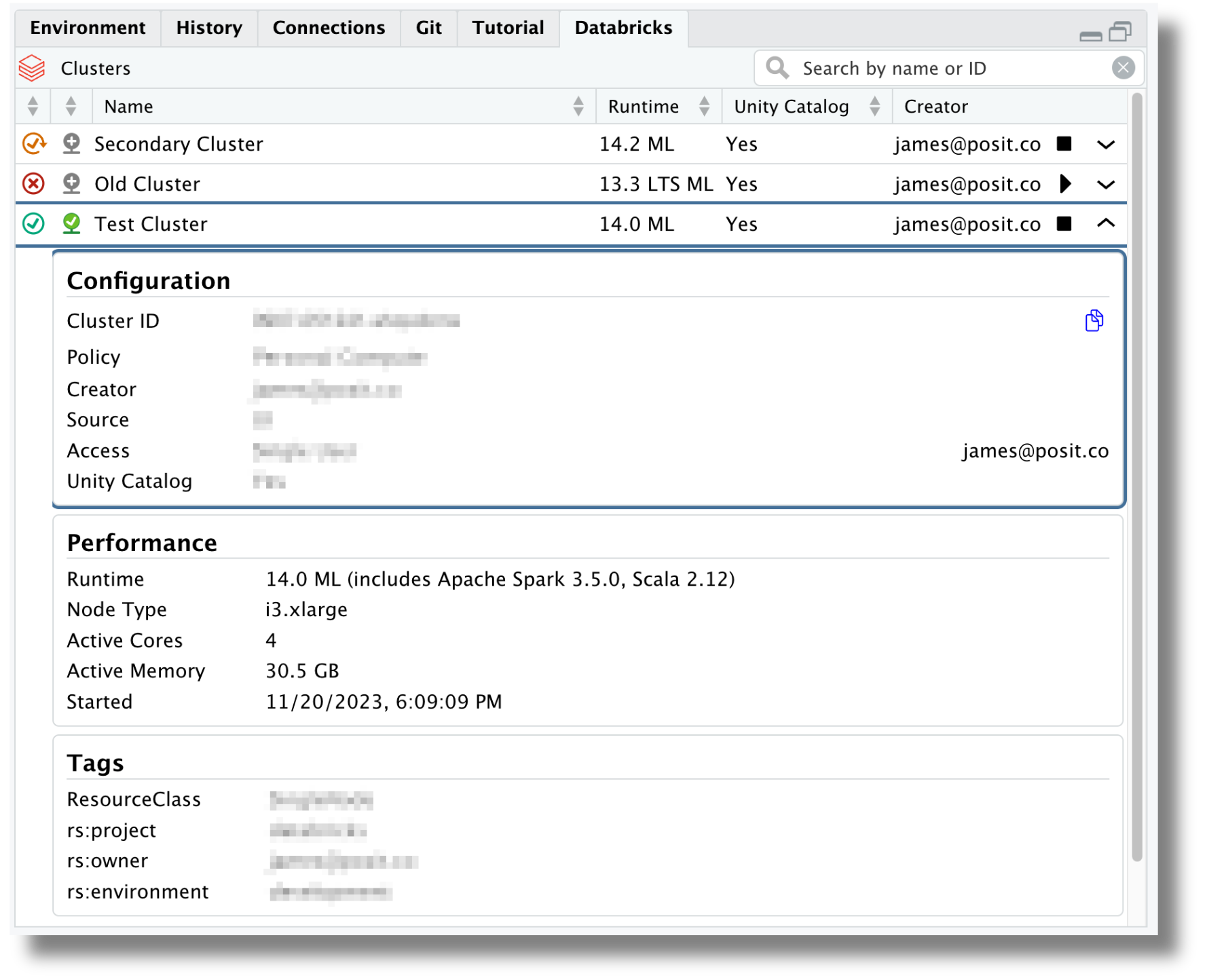

The recent release of Posit Workbench includes two specific enhancements for Databricks users: delegated Databricks credentials and a new Databricks UI within the RStudio IDE. With this release, users can log in to a given Databricks workspace when they start an RStudio or VS Code session and then interact directly with the clusters in that workspace from their preferred environment.

Databricks connectivity

Recent updates to the sparklyr package and the introduction of pysparklyr provide support for connecting to Databricks via Databricks Connect v2. These package developments enable R users to connect to and interact with Databricks data, including data on Unity Catalog, directly from their R session. Built-in support for the RStudio Connections pane allows users to browse available data directly from the RStudio IDE.

We also recently released an update to our Pro Drivers that includes an ODBC driver for Databricks. This driver can be used with the new databricks() function from the odbc package to connect to Databricks clusters and SQL warehouses. The databricks() function will also work with the ODBC driver provided directly from Databricks.

Databricks Marketplace

Both Posit and Databricks are committed to providing access to Posit products directly within the Databricks environment. This work is currently taking shape and will be delivered as Databricks Lakehouse Apps become available. Stay tuned for future updates in this area.

Webinar Questions

The following questions were asked during the joint webinar on December 5, 2023.

Is it good to have Posit Package Manager so that every developer can share packages installed in R or Python and packages don’t need to be installed for every developer?

Posit Package Manager can provide a number of advantages for Databricks users. It provides access to historical package snapshots, which aid reproducibility, and it serves pre-built R package binaries for Linux, which can dramatically reduce the time needed to install packages into a Databricks compute environment. Posit Package Manager can also be used to distribute internally developed packages across the organization.
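Pinning a repository to a snapshot date is what delivers the reproducibility benefit. The minimal sketch below shows how a snapshot-pinned URL might be assembled. It assumes the URL layout of the public Posit Package Manager instance at packagemanager.posit.co (/cran/<date> for source packages, /cran/__linux__/<distro>/<date> for Linux binaries) and that the Databricks runtime is Ubuntu 22.04 ("jammy"); check your runtime's release notes and your own Package Manager server's host name. cran_repo_url is a hypothetical helper, and the URL it returns would be passed to options(repos = ...) in R.

```python
from datetime import date
from typing import Optional

# Base URL of the public Posit Package Manager instance (assumption: an
# internal Package Manager server would use its own host name here).
PPM_BASE = "https://packagemanager.posit.co/cran"


def cran_repo_url(snapshot: Optional[str] = None,
                  linux_distro: Optional[str] = None) -> str:
    """Build a CRAN repository URL, optionally pinned to a snapshot date.

    snapshot: ISO date such as "2023-12-01"; None means "latest".
    linux_distro: distribution code name such as "jammy" to request
    pre-built Linux binaries instead of source packages.
    """
    if snapshot is None:
        tag = "latest"
    else:
        # Validate the date so a typo fails early instead of yielding a bad URL.
        tag = date.fromisoformat(snapshot).isoformat()
    if linux_distro:
        return f"{PPM_BASE}/__linux__/{linux_distro}/{tag}"
    return f"{PPM_BASE}/{tag}"
```

For example, cran_repo_url("2023-12-01", "jammy") yields a binary repository frozen at that date, so every developer and every cluster installs the same package versions.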

Can VS Code be run on a local PC and still connect to Databricks?

Yes, a local installation of VS Code can connect to Databricks via Databricks Connect v2 and the Databricks extension for VS Code.

Is the Databricks extension available in Posit Cloud also, or only in Posit Workbench?

The Databricks pane in RStudio will be available in an upcoming update to posit.cloud.

Do cluster policies still apply in the IDE?

Yes, any cluster policies that are in place will be respected when connecting to a Databricks cluster, whether through Databricks Connect v2 (for example, with sparklyr) or via ODBC.

Can you write to a Unity Catalog table from R in the format of catalog.schema.table?

This is something that is currently being worked on and tracked in this GitHub issue.

If a Databricks cluster has Python libraries installed that aren’t available in the runtime, will those be included in the environment created in Workbench?

When sparklyr connects to Databricks, it first queries the cluster to determine which Databricks Runtime (DBR) version it is running. It then checks for a local Python environment that meets the requirements of that DBR version and, if none is found, prompts you to install one.
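The version check described above can be sketched in Python. This is not sparklyr's or pysparklyr's actual code. It assumes the cluster reports its runtime as a spark_version string such as "14.1.x-scala2.12" (the format used by the Databricks Clusters API) and that a compatible databricks-connect package shares the runtime's major and minor version, which is the pairing Databricks recommends for Databricks Connect v2. All function names are hypothetical.

```python
import re
from typing import Optional, Tuple

# Matches the leading "major.minor." of a runtime string such as
# "14.1.x-scala2.12" or "13.3.x-cpu-ml-scala2.12".
_RUNTIME_RE = re.compile(r"^(\d+)\.(\d+)\.")


def runtime_major_minor(spark_version: str) -> Tuple[int, int]:
    """Extract (major, minor) from a Databricks Runtime version string."""
    match = _RUNTIME_RE.match(spark_version)
    if match is None:
        raise ValueError(f"unrecognised runtime version: {spark_version!r}")
    return int(match.group(1)), int(match.group(2))


def required_package(spark_version: str) -> str:
    """pip requirement for a databricks-connect release matching the runtime."""
    major, minor = runtime_major_minor(spark_version)
    return f"databricks-connect=={major}.{minor}.*"


def needs_install(spark_version: str, installed: Optional[str]) -> bool:
    """True when no local databricks-connect matches the cluster's runtime."""
    if installed is None:
        return True  # nothing installed locally
    parts = installed.split(".")
    if len(parts) < 2 or not (parts[0].isdigit() and parts[1].isdigit()):
        return True  # unparseable local version: treat it as missing
    return (int(parts[0]), int(parts[1])) != runtime_major_minor(spark_version)
```

When needs_install returns True, a tool following this approach would offer to create an environment containing required_package(...) rather than silently reusing a mismatched one.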

What is the best practice for debugging code that ran (or failed to run) in Databricks from RStudio?

If you’re running interactively in RStudio, you can use the browser() function to pause execution and inspect the environment. If you’re running scripts in batch, you can use print() statements.

Will this setup work if a workspace is not integrated with Unity Catalog?

Yes, but if you need to connect to Databricks for SQL only and you don’t have Unity Catalog, we recommend using the ODBC drivers and the new odbc::databricks() function.

Will the ODBC Databricks function be available via open source?

Yes, the odbc::databricks() function will be available to all users of the odbc package.

Does the Databricks odbc driver handle things like spark_ml library calls or is it only more simple data pulls?

The ODBC driver does not support spark_ml library calls. For machine learning operations, we recommend using the sparklyr package and its growing ML functionality.

Is it possible to use compute via Databricks environment from Posit Connect (with a Shiny application)?

Yes, this can be accomplished with either an ODBC or sparklyr connection from the Shiny app. For interactive applications like Shiny, we recommend using ODBC to simplify the connection requirements and dependencies.

It was shown that it’s able to figure out missing packages in Workbench which are in the Databricks cluster and install those in Workbench. Does it also work the other way around?

Neither sparklyr nor ODBC communicates any information about package dependencies to Databricks. Package management on Databricks is a separate task.

How would you deal with a Shiny app that needs an OAuth connection to Databricks, not a PAT?

Currently, applications hosted on Posit Connect that interact with Databricks require a Databricks personal access token (PAT), which is supplied to the content as an environment variable.
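Keeping the PAT in an environment variable means the token never appears in the deployed code. The minimal sketch below shows an application reading it at runtime. It assumes the variables are named DATABRICKS_HOST and DATABRICKS_TOKEN, the names read by the Databricks CLI and SDKs; your deployment may use different names. databricks_rest_auth is a hypothetical helper that builds a workspace URL and a bearer-token header for REST calls.

```python
import os
from typing import Dict, Mapping, Optional, Tuple


def databricks_rest_auth(
    env: Optional[Mapping[str, str]] = None,
) -> Tuple[str, Dict[str, str]]:
    """Return (workspace_url, headers) built from a PAT in environment variables.

    Assumes DATABRICKS_HOST and DATABRICKS_TOKEN are set for the content
    (for example, in the content's environment variable settings on Connect).
    """
    env = os.environ if env is None else env
    host = env.get("DATABRICKS_HOST", "").strip()
    token = env.get("DATABRICKS_TOKEN", "").strip()
    if not host or not token:
        raise RuntimeError("set DATABRICKS_HOST and DATABRICKS_TOKEN for this content")
    # Accept a bare host name as well as a full URL.
    if "://" not in host:
        host = "https://" + host
    # A PAT is sent as a bearer token on each REST request.
    return host.rstrip("/"), {"Authorization": f"Bearer {token}"}
```

Failing fast with a clear error when either variable is missing makes a misconfigured deployment obvious in the content logs instead of surfacing later as an authentication failure.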

Would we be able to trigger Jobs using the Job launcher in Databricks?

This is something that the Posit Workbench team is looking into for 2024.

Can we interact with Databricks on RStudio if we don’t have Posit Workbench?

You can use sparklyr or the Databricks ODBC drivers to connect and run code on Databricks from RStudio (or any R environment) as well as leverage the Connections pane in RStudio. The only features that are specific to Posit Workbench are the Databricks pane in RStudio and delegated Databricks credentials.

When you run VS Code locally connecting to Databricks, can you run code in chunks rather than the entire file?

Not today, though there is notebook support that would give you similar functionality. Databricks is working on ways to run all code on Databricks from your local VS Code session.

For unstructured data, Databricks Connect in Python allows you to copy files to Unity Catalog volumes via copyFromLocalToFs. Do you plan on also implementing it for the other way, e.g., copyFromFsToLocal?

This capability will be available in R through the R SDK for Databricks via the Volumes API.

How are keys and credentials handled inside the Databricks UI for our processes?

When a Posit Workbench session is launched and a user signs in to a selected Databricks workspace, a set of credentials is stored on disk and periodically refreshed by Workbench so that they do not grow stale. These credentials are stored in such a way that common Databricks SDKs and tools know how to use them for authentication.
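The answer does not name the file Workbench writes, so the sketch below assumes the INI layout of the Databricks CLI configuration file (~/.databrickscfg, or the path in DATABRICKS_CONFIG_FILE), one format the Databricks SDKs know how to read. It illustrates how a tool might look up stored credentials for a profile; it is not Workbench's implementation, and parse_profile and load_profile are hypothetical names.

```python
import configparser
import os
from pathlib import Path
from typing import Dict, Optional


def parse_profile(text: str, profile: str = "DEFAULT") -> Dict[str, Optional[str]]:
    """Read host and token for one profile from .databrickscfg-style INI text."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    if profile != "DEFAULT" and not parser.has_section(profile):
        raise KeyError(f"profile {profile!r} not found")
    section = parser[profile]
    # configparser lets named profiles inherit missing keys from [DEFAULT].
    return {"host": section.get("host"), "token": section.get("token")}


def load_profile(profile: str = "DEFAULT",
                 path: Optional[str] = None) -> Dict[str, Optional[str]]:
    """Load a profile from DATABRICKS_CONFIG_FILE, falling back to ~/.databrickscfg."""
    if path is None:
        path = os.environ.get("DATABRICKS_CONFIG_FILE",
                              str(Path.home() / ".databrickscfg"))
    return parse_profile(Path(path).read_text(), profile)
```

Because the file is re-read on each call rather than cached, a tool built this way would pick up refreshed tokens without restarting, which is the property that periodic refreshing relies on.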

Right now, within Databricks you have the ability to spin up an RStudio IDE instance but you can’t turn off the cluster that it’s running on and other quirks. Are there any enhancements on that?

We are aiming to deliver a solution that solves all of the issues with the current RStudio IDE integration on Databricks. Support for Databricks Connect v2 in sparklyr is step one, and the Posit Workbench enhancements are step two. The next step is hosting Posit Workbench on Lakehouse Apps, which is designed to eliminate the issues that exist with hosted RStudio on Databricks today. This is still a work in progress, and more details will be shared over time.

Learn More

If you’d like to see a demo of the functionality discussed above and hear more about the future of the Posit and Databricks partnership, we encourage you to review the webinar recording. Have additional questions about Posit and Databricks? Our team would be happy to chat with you.