Why Shiny for Python?

With Shiny for Python out of alpha as of April, many have wondered how it stacks up against other popular alternatives. In this article, we’ll explore the design philosophy behind Shiny for Python and how it compares to other frameworks for developing data science web applications. If you are a data scientist working mostly in Python, we hope this motivates you to take a serious look at Shiny for Python.

Shiny for Python

Shiny optimizes for programmatic range. You can build quickly with Shiny and create simple interactive visualizations and prototype applications in an afternoon. But unlike other frameworks targeted at data scientists, Shiny does not limit your app’s growth. Shiny remains extensible enough to power large, mission-critical applications.

The main feature that differentiates Shiny from other frameworks is reactive execution. Reactive execution has two main benefits. First, Shiny can rerender only the components that need to change, which greatly speeds up your application and improves the user experience. Second, Shiny infers what needs to be rerendered without requiring you to write explicit callbacks, which keeps your code simple as your app grows in complexity.

For example, consider the computation graph below. We have two plots built from a single random sample of a dataset, and a checkbox that changes the scale of one of those plots. If we change the plot scale, only the relevant plot will rerender. We don’t read the dataset in again or take a new random sample. Shiny automatically does the correct thing without forcing you to explicitly cache data or write callback functions.

Computation graph for a simple application with Shiny.
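To make the graph concrete, here is a toy model of reactive execution in plain Python. It is a sketch, not Shiny’s implementation: the `Reactive` class, the fake dataset, and the placeholder "plots" are all hypothetical. Each node caches its value, records which nodes read it, and is recomputed only after one of its upstream inputs changes. In Shiny you declare the same graph with inputs, reactive calculations, and render functions, and the framework does this bookkeeping for you.

```python
import random


class Reactive:
    """Toy reactive node (illustration only, not Shiny's implementation).

    A node either holds a plain input value or computes its value with `fn`.
    Computed values are cached until an upstream input changes.
    """

    _active = None  # the node currently computing, used to record dependencies

    def __init__(self, fn=None, value=None):
        self.fn = fn
        self._value = value
        self._valid = fn is None  # inputs start valid; computed nodes are lazy
        self.dependents = set()
        self.runs = 0  # how many times `fn` has actually executed

    def get(self):
        # Whoever is computing right now depends on this node.
        if Reactive._active is not None:
            self.dependents.add(Reactive._active)
        if not self._valid:
            previous, Reactive._active = Reactive._active, self
            try:
                self._value = self.fn()
                self.runs += 1
            finally:
                Reactive._active = previous
            self._valid = True
        return self._value

    def set(self, value):
        # Setting an input invalidates everything downstream of it, nothing else.
        self._value = value
        self._invalidate()

    def _invalidate(self):
        for node in list(self.dependents):
            node._valid = False
            node._invalidate()
        self.dependents.clear()


rng = random.Random(0)

# Hypothetical app: dataset -> sample -> two plots; the checkbox feeds plot_b only.
dataset = Reactive(lambda: list(range(1000)))
sample = Reactive(lambda: rng.sample(dataset.get(), 100))
log_scale = Reactive(value=False)  # stands in for the checkbox input
plot_a = Reactive(lambda: sorted(sample.get())[:3])  # placeholder "plot"
plot_b = Reactive(lambda: ("log" if log_scale.get() else "linear", len(sample.get())))


def render():
    # Stand-in for the browser requesting both outputs.
    return plot_a.get(), plot_b.get()


first = render()
log_scale.set(True)  # the user ticks the checkbox
second = render()
print(dataset.runs, sample.runs, plot_a.runs, plot_b.runs)  # 1 1 1 2
```

Ticking the checkbox reruns only `plot_b`; the dataset is read once and the sample is drawn once, which is the behavior the graph describes.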

Streamlit

The promise of Streamlit is to be the simplest way to transition a Python script or notebook into a web application. However, Streamlit’s execution model means that it can’t fully deliver on that promise for complex, real-world problems.

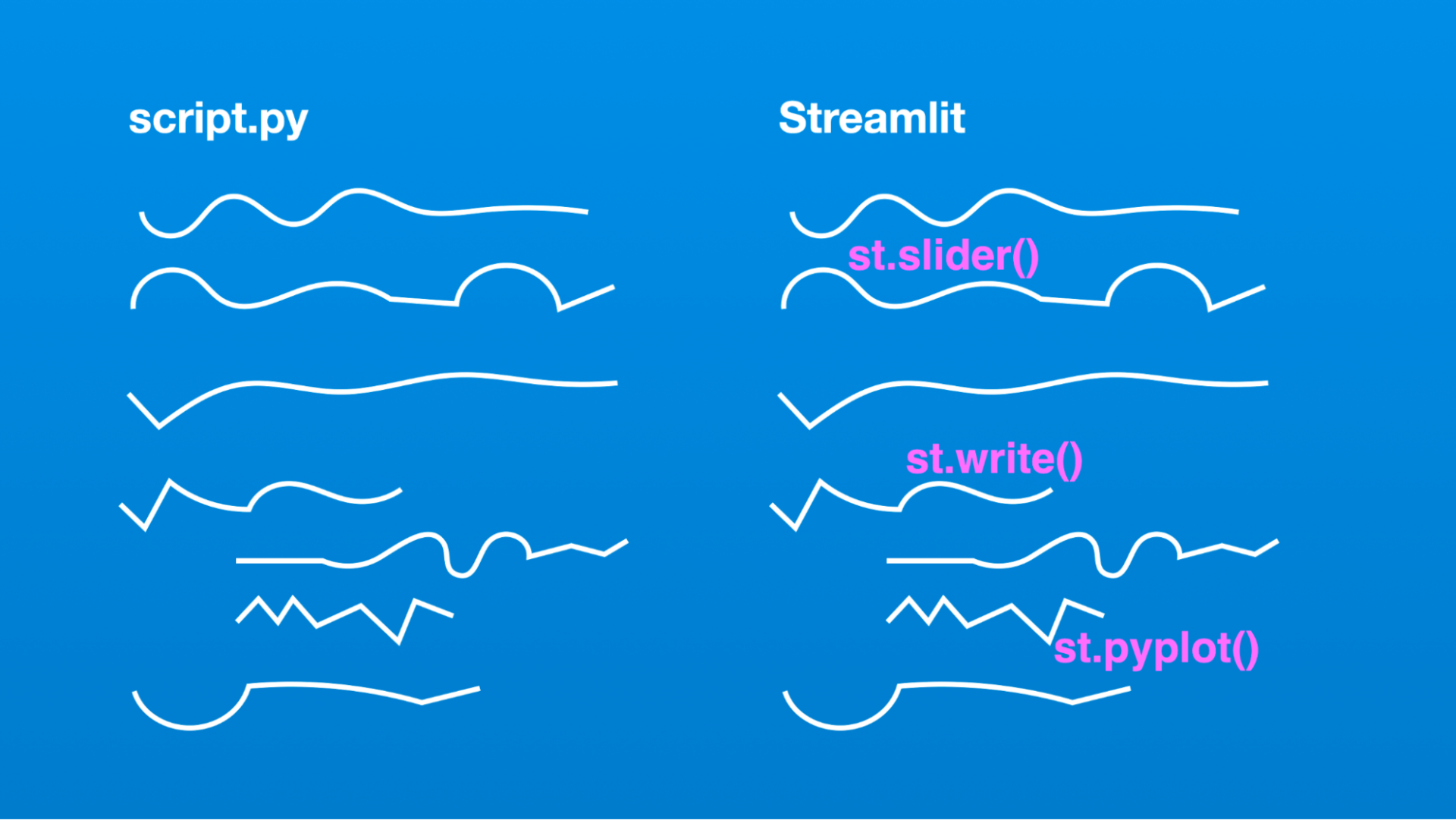

Streamlit’s architecture allows you to write apps the same way you write plain Python scripts. To unlock this, Streamlit apps have a unique data flow: any time something must be updated on the screen, Streamlit reruns your entire Python script from top to bottom.

– Streamlit documentation, Main concepts

Streamlit tries to achieve this simplicity by rerunning the application code from start to finish whenever any input in the application changes. This top-to-bottom strategy is great for getting started because, usually, your initial script was written to be run from top to bottom. The cost of this strategy is that it’s inefficient for many applications and intractable for large ones.

The sampling application set up in the Shiny section illustrates this problem. A naive Streamlit implementation will lead to a new sample being taken every time we change the plot scale, which is inefficient and incorrect. Usually, when working with sampled data, we want to take a single sample and use downstream options to interrogate the qualities of that sample. If we take a new sample every time the plot scale changes, we will mislead the user about the qualities of that sample.

In a naive Streamlit app, each time your user interacts with the page, the app is fully rerendered from top to bottom. Shiny infers these relationships and works out how to minimally rerender only the parts of the app affected by the user’s interaction.
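A plain-Python simulation makes the naive pattern’s problem visible. The `script` function below is a hypothetical stand-in for an app script that a framework reruns from top to bottom on every interaction; it does not use Streamlit’s API, and the "plots" are placeholders.

```python
import random

rng = random.Random(0)
calls = {"read": 0, "sample": 0}


def read_dataset():
    calls["read"] += 1
    return list(range(1000))


def take_sample(data):
    calls["sample"] += 1
    return rng.sample(data, 100)


def script(log_scale):
    """Hypothetical app script; the whole thing reruns on every interaction."""
    data = read_dataset()
    sample = take_sample(data)
    plot_a = sorted(sample)[:3]  # placeholder "plots"
    plot_b = ("log" if log_scale else "linear", sample)
    return plot_a, plot_b


# First page load, then the user toggles the scale checkbox three times.
renders = [script(log_scale) for log_scale in (False, True, False, True)]
print(calls)  # {'read': 4, 'sample': 4}
print(renders[0][1][1] == renders[1][1][1])  # False: a new sample every toggle
```

Every toggle rereads the data and draws a different sample, so the plot the user is "rescaling" is no longer a plot of the same data.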

The typical way people solve this problem is to use caching as a control-flow strategy. If we cache the value of the sampled data, then changing the plot options won’t cause the data to be resampled. This works but creates a new set of problems:

- Not everything can be serialized, so we need to decide between two caching strategies based on the data we’re trying to cache. Streamlit provides some great documentation on this, but there are still more than 14 different cases that can affect which caching strategy to use. You need to be aware of these cases because the wrong caching strategy can cause your application to behave unpredictably.

- Caching is not free. Serializing large objects and storing them in memory can cause performance problems or crash our app.

- We need to worry about cache invalidation. For example, if you speed up an expensive database query by caching its result, your app may return stale, cached data when the data in the database changes.
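Caching as a control-flow strategy can be sketched with `functools.lru_cache` standing in for a framework cache. This is a hypothetical plain-Python model, not Streamlit’s API (Streamlit’s own decorators, `st.cache_data` and `st.cache_resource`, differ in the details). It shows the fix working, then two of the problems listed above: stale data after the database changes, and an object that a serializing cache cannot store.

```python
import functools
import pickle
import random
import threading

rng = random.Random(0)
database = {"rows": list(range(1000))}  # hypothetical stand-in for a real database


@functools.lru_cache(maxsize=None)
def query_rows():
    # Pretend this is an expensive query; the cache hides later changes to the data.
    return tuple(database["rows"])


@functools.lru_cache(maxsize=None)
def take_sample(n):
    # Cached, so a full rerun no longer draws a new sample.
    return tuple(rng.sample(query_rows(), n))


def script(log_scale, n=100):
    """Hypothetical app script that is rerun top to bottom on every interaction."""
    return ("log" if log_scale else "linear", take_sample(n))


first = script(False)
second = script(True)
print(first[1] == second[1])  # True: toggling the scale reuses the sample

# Stale data: the rows change, but the caches keep serving the old result.
database["rows"] = list(range(5000, 6000))
stale = script(False)
print(max(stale[1]) < 1000)  # True: still sampled from the old rows

# Fixing it means invalidating every affected cache by hand.
query_rows.cache_clear()
take_sample.cache_clear()
fresh = script(False)
print(min(fresh[1]) >= 5000)  # True

# Serialization: a cache that pickles its values cannot store every object.
try:
    pickle.dumps(threading.Lock())
except TypeError as err:
    print("cannot cache:", err)
```

Note that clearing only `take_sample` would not be enough, because `query_rows` would still return the old rows. Keeping track of which caches depend on which data is the developer’s job here.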

These problems are not the end of the world if your application is small enough, but as it gets bigger and more complex, caching becomes an inadequate control flow strategy.

Streamlit is easy, until it isn’t

Initially, Streamlit is a convenient option to transform your Python script into a web application with minimal effort. And you’ll usually get a very nice-looking app for your effort. Streamlit is great if your app is simple, and you know it will stay simple, but its design creates a lot of headaches as your app grows.

As you gain experience as a Streamlit developer, you’ll find yourself resorting to caching, or manually manipulating session state to extend and maintain your app. These techniques can put you in a dangerous place as a developer because they can introduce complex and subtle bugs that are challenging to diagnose. This leads many data scientists to offload computational complexity to systems like Snowpark or Airflow, switch to a more robust framework, or outsource app development entirely to another team.
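A toy version of the session-state workaround shows how such bugs creep in. The plain dict below is a hypothetical stand-in for per-user session state (Streamlit exposes `st.session_state`, which this sketch does not use). The guard stops resampling, but it also silently ignores a new sample size, because the developer has to remember by hand every input the sample depends on.

```python
import random

rng = random.Random(0)
session_state = {}  # hypothetical stand-in for per-user session state


def script(sample_size, log_scale):
    """Hypothetical app script, rerun top to bottom on every interaction."""
    # Guard so reruns don't resample: only draw a sample the first time.
    if "sample" not in session_state:
        session_state["sample"] = rng.sample(range(1000), sample_size)
    sample = session_state["sample"]
    return len(sample), "log" if log_scale else "linear"


print(script(100, False))  # (100, 'linear')
print(script(100, True))   # (100, 'log'): no resample, as intended
print(script(250, True))   # (100, 'log'): bug, the new sample size is ignored
```

The fix is to also check whether `sample_size` changed, and to repeat that check for every future input. That bookkeeping is exactly the dependency tracking that reactive execution automates.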

We believe Shiny for Python is a happy alternative. Shiny is as easy to program as Streamlit for most applications, but it can also handle large mission-critical applications. Your first app should only take a few hours to get up and running. As your app becomes more complex, Shiny’s reactivity helps it continue to run quickly and remain easy to maintain. You’ll be working with a framework designed to scale with you.

Dash

Dash is optimized for deployment efficiency. The idea behind a Dash app is that every component is independent of every other component. This means that you can have multiple processes or servers render parts of the app. From a deployment perspective, this is extremely appealing because it is easy to scale the app horizontally.

The cost of these easy deployments is that Dash requires you to write stateless callbacks. Rather than relying on global variables or stored intermediate computations, each component of an app must be independent and self-contained. This can make Dash apps difficult to write, because it differs from how you probably write programs that you run locally. To implement the sampling example above, we would need either one callback that generates both plots or two callbacks that each read in the dataset.
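Both options can be sketched as plain, stateless Python functions. This is not Dash code: the function names, the `seed` argument, and the `reads` counter are hypothetical stand-ins for callbacks and their inputs. Because no state survives between calls, every callback that needs the sample has to rebuild it from its inputs.

```python
import random

reads = 0


def read_dataset():
    global reads
    reads += 1
    return list(range(1000))


# Option 1: one callback builds both plots from one sample. They stay
# consistent, but toggling the scale recomputes everything, sample included.
def both_plots(log_scale, seed):
    sample = random.Random(seed).sample(read_dataset(), 100)
    return sorted(sample)[:3], ("log" if log_scale else "linear", len(sample))


# Option 2: two independent callbacks. Each rereads the dataset, and both need
# the same seed as an input to reproduce the same sample.
def plot_a(seed):
    sample = random.Random(seed).sample(read_dataset(), 100)
    return sorted(sample)[:3]


def plot_b(log_scale, seed):
    sample = random.Random(seed).sample(read_dataset(), 100)
    return ("log" if log_scale else "linear", len(sample))


a = plot_a(seed=42)
plot_b(False, seed=42)
plot_b(True, seed=42)  # the user toggles the scale: the data is read again
combined = both_plots(True, seed=42)
print(reads)  # 4
print(combined[0] == a)  # True: same seed, same sample
```

Either way, work that a stateful framework could keep in memory is redone on each call, in exchange for callbacks that any process can serve.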

Additionally, modern deployment practices have somewhat undermined the appeal of stateless applications. If you can use a container orchestration system like Kubernetes, you can get very efficient deployments even if your application is stateful. If you deploy Shiny using Kubernetes, or Streamlit using Snowflake, your deployment will probably be about as cost-effective and fault-tolerant as a Dash app.

Shiny’s Design Philosophy

Shiny for Python strives to be the best web application development framework for data scientists. It does this by striking the right balance between simplicity and power.

It’s very easy to get started and create simple Shiny applications. Our interactive ShinyLive site provides many examples.

What sets Shiny for Python apart from other application frameworks targeted at data scientists is that Shiny apps can grow to elegantly handle complicated problems. Other frameworks focus on a narrow scope of problems and are hard to use when your problem falls outside of that scope. They either stop you in your tracks or force unfortunate tradeoffs in terms of performance and code complexity. These limitations mean that simple applications often need to be refactored by a “real” web developer to realize their full potential.

Shiny strives to eliminate that problem by giving you a few tools and concepts which are used to solve a wide range of business problems:

- Shiny’s defaults create performant, attractive applications.

- Reactivity makes large applications run quickly and efficiently.

- You can use modules to encapsulate, reuse, and share elements of a Shiny application.

- The framework fully supports CSS and JavaScript customization.

- Server-side state makes it simple to pass calculations between rendering functions.

Shiny offers a framework that provides a smooth path from small-scale prototypes all the way to robust data science web applications.

Get Started with Shiny for Python

Learn more about Shiny for Python on its website, shiny.rstudio.com/py. A good place to start is the Learn Shiny page, followed by the many example apps on ShinyLive.

We would love to see what you make! One of the best parts of developing R-Shiny has been the outpouring of support from the community: people share their Shiny apps online, at conferences, and at local meetups, and develop extensions that unlock new functionality. We encourage you to share your work online using the #PyShiny tag on social media.

You can report bugs and feature requests on the GitHub issues page, github.com/rstudio/py-shiny. Please reach out to us if you have any questions.

Posit PBC also supports several professional tools to aid R and Python data science teams. These include:

- shinyapps.io to deploy your Shiny for Python applications. Learn more about deploying Shiny applications on the Shiny website.

- Posit Workbench is our premier development experience for data science teams who use R and Python. Code in your preferred environment, collaborate with other data scientists more effectively, and access enterprise features like centralized management, security, and commercial support.

- Posit Connect helps teams publish everything they create in R & Python, including Shiny, Streamlit, and Dash applications, reports, notebooks, and dashboards. Automate code execution so your data products are always up to date.