2025-12-19 AI Newsletter

Year in review 2025

The AI world moves quickly, and much has happened this past year! We’re feeling festive, so today’s newsletter includes a look back at the year in AI and data science, both at Posit and in the wider world.

By the start of 2025, OpenAI’s best “daily-driver” model was GPT-4o and Anthropic’s was Claude 3.5 Sonnet. Google Gemini was well behind the frontier. Anthropic had recently released the Model Context Protocol, and it was picking up steam. VS Code Copilot Chat was the closest thing to a mainstream coding agent.

January 9th: The first release of ellmer, an R package that makes it easy to interact with LLMs in R, hit CRAN.

January 27th: R1, an open-weights reasoning model from Chinese lab DeepSeek, triggered a selloff of AI-related stocks. Misconceptions about the model’s training costs, along with some UI innovations, led many to fear that US labs would soon lose their position at the frontier.

February 24th: Anthropic released Claude Code as a research preview, alongside Claude 3.7 Sonnet. The “coding agent in the terminal” concept was a defining trend of 2025, with OpenAI and Google releasing their own takes on it in April and June, respectively.

March 24th: Posit released chatlas, ellmer’s Python sibling.

March 25th: Google’s release of Gemini 2.5 helped move Gemini back up to the frontier.

June 3rd: Posit announced Positron Assistant, a coding agent for Positron.

June: Posit released several companion R packages for ellmer on CRAN: vitals (LLM evaluation), ragnar (retrieval-augmented generation), and mcptools (Model Context Protocol).

August 28th: Posit announced Databot, an exploratory data analysis assistant.

November: A flurry of frontier models was released heading into Thanksgiving, including Claude Opus 4.5, Gemini 3 Pro, Gemini 3 Pro Image (aka Nano Banana Pro), GPT-5.1 Pro, GPT-5.1-Codex-Max, DeepSeek V3.2, and Grok 4.1. The releases from Anthropic, Google, and OpenAI represent the current state of the art.

We have some big updates to share early in the new year. We’ll take January 2nd off for the holidays, but are excited to pick back up in a month!

External news

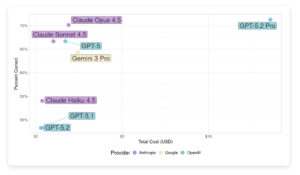

OpenAI released GPT 5.2 last week to mixed reactions. Some benchmarks were quite impressive, but users have generally reported “weird” and unpredictable behavior. You can see how it stacks up against other models we’ve benchmarked in our R code evaluation:

Posit news

If you’re interested in trying out an experimental AI product in RStudio, you can join the beta testing waitlist.

We published a blog post evaluating whether local models can be used effectively in coding agents. Our take: models small enough to run on a laptop aren’t yet capable enough to power tools like Positron Assistant or Databot.

chores 0.3.0 is now on CRAN. chores is an R package that provides a library of ergonomic LLM assistants designed to help you complete repetitive, hard-to-automate tasks. Working on the release helped Simon realize that LLMs you can run on your laptop are stronger at instruction-following than he had expected.

Terms

Keeping with the “year in review” theme, we thought we’d take a look back at all the terms we’ve defined so far in our newsletter.

- Training and inference – Training occurs when large corpora of data are used to create an LLM. Inference occurs when a trained model generates an output from an input.

- Prompt injection – A type of attack against LLM applications where an attacker provides input designed to trick the model into behaving in unintended ways.

- Tool calling and agents – Tool calling allows LLMs to interact with external systems. An agent uses tool calling in a loop to gather information about the world and make changes to it.

- Situational awareness – A model’s awareness of its own circumstances. This is particularly relevant when a model realizes it is being evaluated and alters its behavior as a result.

- AGI – Artificial general intelligence. Artificial intelligence that matches or exceeds human intelligence across a wide range of tasks.

- Emergent capability – A behavior that arises bottom-up from a system’s complex processes rather than through explicit design, such as introspection in LLMs.

- Tokenization – The process of breaking text and other inputs into tokens, the fundamental unit of information processed by LLMs.

- Consumer and API pricing – Consumer pricing is typically a flat subscription fee that lets you use a set of applications from the provider. API pricing is for programmatic use where you pay based on your token usage.
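To make the “tool calling in a loop” idea behind agents a bit more concrete, here is a minimal Python sketch. It is not how ellmer, chatlas, Positron Assistant, or Databot are implemented. The model is a hypothetical scripted stand-in (`fake_model`) rather than a real LLM, and the tool (`get_row_count`) and message format are invented for illustration. The loop has the same shape, though: ask the model, run any tool it requests, feed the result back, and stop once it answers without requesting a tool.

```python
import json
from typing import Callable


# Hypothetical tool: a real agent might query a database, read files, or call an API.
def get_row_count(table: str) -> int:
    fake_db = {"penguins": 344, "mtcars": 32}
    return fake_db.get(table, 0)


TOOLS: dict[str, Callable[..., object]] = {"get_row_count": get_row_count}


def fake_model(messages: list[dict]) -> dict:
    """Hypothetical stand-in for an LLM call: requests a tool until it sees a result, then answers."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {
            "role": "assistant",
            "tool_call": {"name": "get_row_count", "arguments": {"table": "penguins"}},
        }
    return {"role": "assistant", "content": f"The penguins table has {tool_results[-1]['content']} rows."}


def run_agent(user_prompt: str, model=fake_model, max_turns: int = 5) -> str:
    messages = [{"role": "user", "content": user_prompt}]
    for _ in range(max_turns):
        reply = model(messages)
        messages.append(reply)
        call = reply.get("tool_call")
        if call is None:
            # No tool requested: the model has produced its final answer.
            return reply["content"]
        # Run the requested tool and append its result so the model sees it next turn.
        result = TOOLS[call["name"]](**call["arguments"])
        messages.append({"role": "tool", "name": call["name"], "content": json.dumps(result)})
    raise RuntimeError("agent did not finish within max_turns")


print(run_agent("How many rows are in the penguins table?"))
```

The `max_turns` cap is there because a real model can keep requesting tools indefinitely; production agents typically add similar guardrails.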

Learn more

- In a speech at the University of Washington, Cory Doctorow made several critiques of AI that many of us found resonant.

- The New York Times published an interesting article on the distinctive (and much-hated) style of AI writing.

- The Linux Foundation announced the formation of the Agentic AI Foundation (AAIF), along with three founding donations: the Model Context Protocol (MCP) from Anthropic, AGENTS.md from OpenAI, and goose from Block. AAIF’s stated mission is to provide “a neutral, open foundation to ensure agentic AI evolves transparently and collaboratively.”